Design for Scale

Oct 2024

I gave this talk at the October 29 DesignX Design for Scale event.

Hi folks! Today we’re talking about Design for Scale! Interesting! When we say ‘Design for Scale’ … what do we mean? What IS scale?

I think what we really mean is: Design for predictable outcomes. Most customers aren’t try to solve for scale – that’s not their problem, that’s actually your problem. They assume that your product will scale, that’s table stakes. But what they’re shopping for, and what I care about for our customers is that our product gives them good, repeatable outcomes every single time – that’s what they’re paying for. As you might know, AI makes repeatable somewhat difficult.

Hey! I’m Tom Creighton, I’m a principal designer at Ada here in Toronto. Ada uses AI to automate customer service – we’ve powered more than 4 billion automated customer interactions so you could say we’re familiar with scale. We’ve been working on ‘machine intelligence’ problems since before LLMs, before OpenAI and ChatGPT – but particularly within the last year and a half or so, especially since LLMs arrived at scale themselves – we’ve been going deep on how to design well with AI.

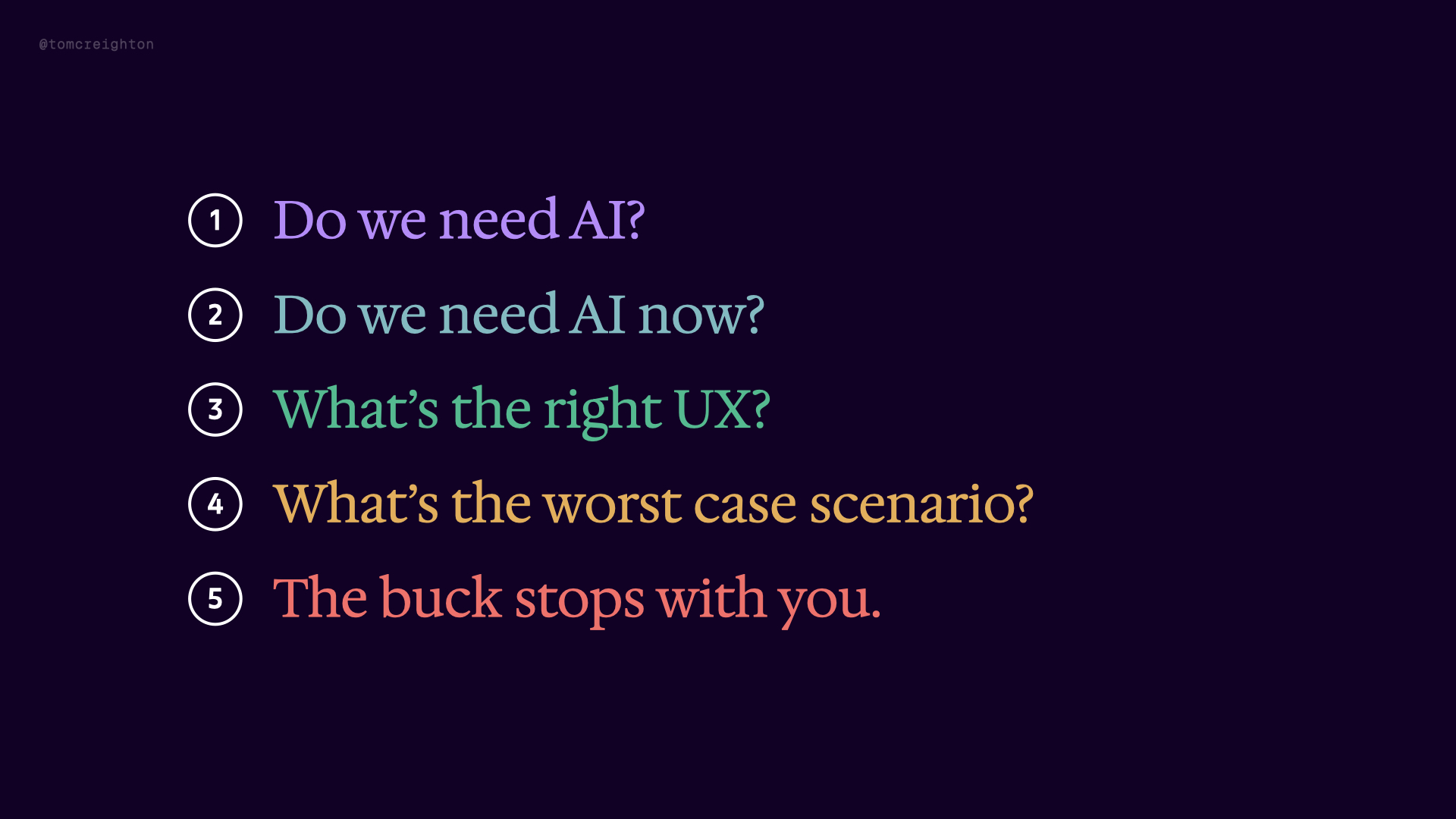

It will not surprise you to learn that there are hurdles to clear to make good AI decisions, especially at scale. At scale, every bad decision compounds. It’s really easy to make bad decisions around AI, because it doesn’t behave how we think software should behave! We invented computers that are kind of bad at their jobs! As designers, as PMs, as engineers – as product people – we need to rebuild our intuition around how to create software that drives predictable, repeatable outcomes. So I wanted to give you five questions that I think will help you make better decisions and drive better outcomes about designing for – and with – AI at scale. I’m not going to tell you specifically what to do because everything is contextual, but I’m going to help you know how to know what to do.

First: does this even need to be AI? The most basic question we could possible ask about using AI – should we? Can we reach the same outcome without AI, or would a simpler method suffice? Or put another way: Is AI doing anything unique for us that other solutions can’t achieve? What can I do with AI that I couldn’t do before? How can we ensure that AI adds tangible benefits to our product? The beginning of every conversation about AI at scale should start here: evaluate whether AI is the right tool or if another technology can address the problem more effectively. For all its benefits – AI is expensive, AI is slow, AI adds latency and error and uncertainty to our product. That’s a big tradeoff you’re making.

We’ve established that we have a good use case for AI. Cool. Is it a good use case right now? AI isn’t great at everything, but it’s become better at a lot of things, and it’s developing FAST. There are a lot of use cases that were pretty intractable with GPT3 that GPT4 just blows away, no problem So secondly: are we trying to solve an AI problem with technology that just isn’t ready yet? Can we pump the brakes until things move from possible to plausible. And: will this problem that we’re trying to solve just be abstracted away as AI improves? Is this a “human in the loop” problem for current AI that will be handled entirely autonomously by future AI? Is that worth waiting for?

You need to assess whether a delay is beneficial, allowing the technology to mature before committing to AI-driven solutions. It’s also a business consideration: how should all of us adapt our approach as AI technology advances, and when should we pause until it’s ready for specific tasks.

Also: when should we try to engineer a solution that might be very bleeding edge and assume that the tech will catch up? Design needs to account for current limitations and manage expectations – there’s still a gap between what’s possible and what’s repeatable and reliable. On the flipside: maybe we definitely need AI right now. But we then need to avoid overcommitting to technology that might soon be obsolete – consider your project goals relative to AI’s current and future capabilities.

All right, now we’re getting into the meat and potatoes stuff! Decide where on the spectrum your system should land – from fully autonomous to highly guided, open-ended AI vs. ‘traditional product design’. All the knobs & levers on the dashboard vs. full-self-driving. What are our options for balancing AI CONTROL with user CONTROL – where are we putting transparency? What parts of the machinery do we actually want to expose?

Think about what you are exposing as points of friction – whether that’s intentional or positive friction, or unintentional speed bumps you’re throwing in front of your users. You’re really trading off magical-feeling interactions for predictable usability here. An open text box that says ‘ask anything’ … when it works … is going to beat the pants of a whole bunch of standard form controls. WHEN IT WORKS. We have many years of established standards for traditional product design. We have emerging patterns – patterns, not standards – for AI interactions. It’s up to you to move to the right position on this spectrum. If your product needs to feel magical, push the knob to full magic – but know what you’re getting into. Which brings us to …

We categorically can not corral every possible error mode of the AI. So: How can we design systems to gracefully handle AI errors or hallucinations? Well, first – what position are you in? Is this “mission critical, red alert” or “actually it’s fine if the AI drops an F-bomb”? Determine these pain points for your business – what needs to work every single time and what has wiggle room? This is a business problem as much as a design problem: we have limited time, limited bandwidth, limited spend – where’s the best place to build guardrails? Working with AI means you will always be fighting imperfections – where will this hurt you the least?

On top of that – there are more technical failure modes to consider: If you treat all information the same, whether it’s from the customer, from the AI, or from third-party systems – that is, if you haven’t established tiers of trustworthiness, your entire system will become untrustworthy quite quickly. You need to understand provenance. There’s also the failure mode where your AI provider just doesn’t work. What then? If your entire product is a wrapper around the OpenAI API, what happens when they go down?

AND on top of that, there aren’t just worst-case scenarios from AI, but from users too! Users will inevitably try to use the tools you give them to do things you never intended for them to do. On a happy path, this gives you lots of interesting ideas for further development and productization. On the unhappy path, your users have – willingly or unwillingly – just prompt-injected your product, and now it’s inventing cool new offers for your product that don’t exist.

Building AI at scale is pre-gaming as many failure modes as possible and understanding where to put your bumpers.

Surprise! This isn’t a question at all! The buck stops with you. This is true of any design but it’s especially true of design with AI What responsibilities do we have as designers to ensure that AI output reflects our values and intentions, Reflects your business’s goals, your business’s values – and your values, your intent as a designer, as a human.

AI lacks any internal perspective or ethical framework, so human oversight – your oversight – is necessary to ensure possible outputs align with your intended outcomes. It’s very, very easy and tempting to ship raw AI outputs right to the user, but that’s not always going to produce good outcomes, and won’t produce predictable outcomes. You need the oversight, you need to understand the behaviours of the system. AI is another TOOL you can deploy to solve problems, not a silver bullet.

LLMs are not making value judgments on whether their output is useful, or truthful, or correct. They can’t. Maybe you’ve seen that image of an IBM slide from 1979 – “A computer cannot be held accountable, therefore a computer must never make a management decision.” That means YOU have to be the adult in the room and you have to be the wizard of oz managing things behind the curtain. You need to make the important decisions about business logic, about bias, about morality, because otherwise your product is at the mercy of the biases and presuppositions baked into the LLM’s training.

So here’s everything – I want to point out that These are sequential gates for decision-making. Determining your answer for each question moves you onto the next. It’s an escalating series of challenges to reach reliability and repeatablity. If you consider these as you’re shaping and shipping new products and new features, I think you’ll find yourself with better and more thoughtful approaches – for AI, for scale, for predictable outcomes.